AI test generation

Generate a full draft test from a single prompt

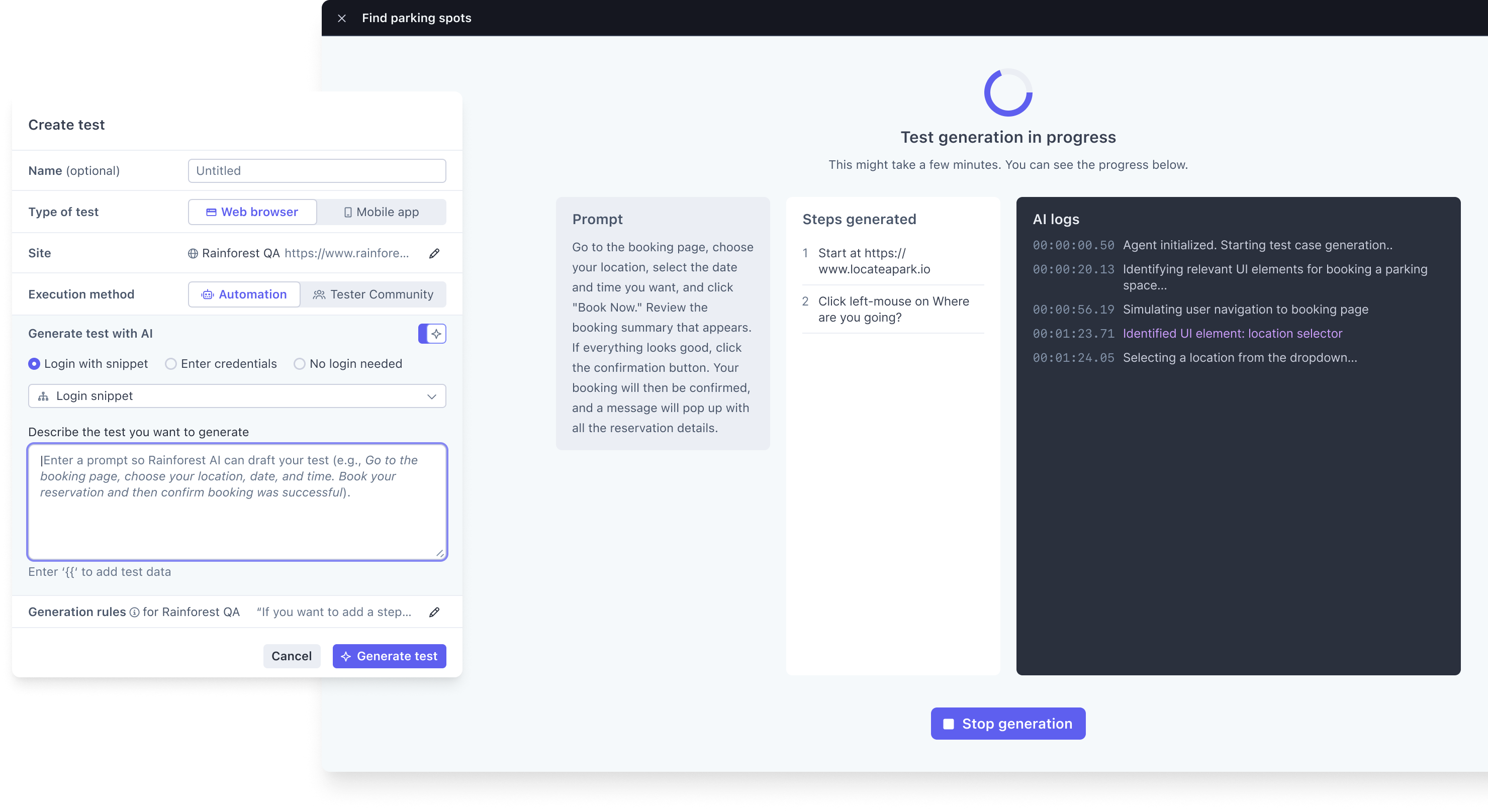

Overview

Rainforest can generate draft tests for you from a prompt and, optionally, a login/setup snippet or credentials. The generated test may not be ready to run without some modifications. Even so, it can save you 80-90% of the time it would take to write a test by hand, because the agents do most of the heavy lifting and you can run multiple generation requests in parallel.

How it works:

- Choose a login/setup snippet or credentials. If your test involves logging into your app or other initial setup, you can handle that step with an existing snippet. When generation starts, we'll run the snippet first and then the agents will take over. This makes generation faster and saves you from having to swap in that snippet later. If you haven't created any snippets yet, add credentials directly.

- Write a prompt. Describe the goal(s) of the test case. You don't need to capture every detail, but include enough context that someone new to your app would understand what to do.

- Generate the test. Our AI agents will spin up a virtual machine and break your prompt down into subtasks, interacting with your app's UI just as a human tester would. They'll keep adding actions and UI elements until they've completed the task described in your prompt.

- Review and refine. In a few minutes, you'll have a draft to which you can add assertions, swap in test data or snippets, and then set to run. This saves significant time compared to writing tests manually.

Limitations:

- Test generation can only use a snippet to set up the test scenario: A generated test can use an existing snippet only at the beginning of the test; all of the generated steps after it are flat. You can swap in existing snippets or create new ones after the draft is generated. We hope to add support for calling existing snippets in the middle of a test in the future.

- Test generation cannot determine what should be a conditional: The generator writes steps only for what appears on the virtual machine during generation, so the agents can't write steps for alternative scenarios that don't occur at that time. You can go back and make any of those steps conditional as needed.

- Test generation can only be done on a Chrome on Windows virtual machine: If you need tests for other platforms, generate the test on Chrome/Windows, change the VM, and make whatever adjustments are needed to get it passing on those platforms. This should still save you time compared to writing the test from scratch.

Tips and tricks

- Write instructions for the agents as you would for an assistant with little context: The agents are adept at deciphering common UI patterns, but if your app has idiosyncrasies, it's best to describe them in your prompt or site rules. This makes generation more reliable.

- Use test data: Test generation can reference previously saved test data using curly brackets. The generated steps then reference that data rather than its resolved values.

- Use site rules, especially for basic information about your app: If you find yourself writing the same instructions or context in all your prompts, add these to the site-specific rules for generation. We'd specifically recommend giving the agents context about the purpose of your app (like you'd do for a QA assistant). This can only help with accuracy.

- Be careful when writing many details into your prompt; the more detailed your instructions, the more room for error: The agents take your instructions at face value. If you have an instruction to "click the create new user button" and that button doesn't exist, they'll get sidetracked trying to find it. If you write at a higher level (e.g., "Add a new user to the account"), they'll have more flexibility to accomplish the task.
